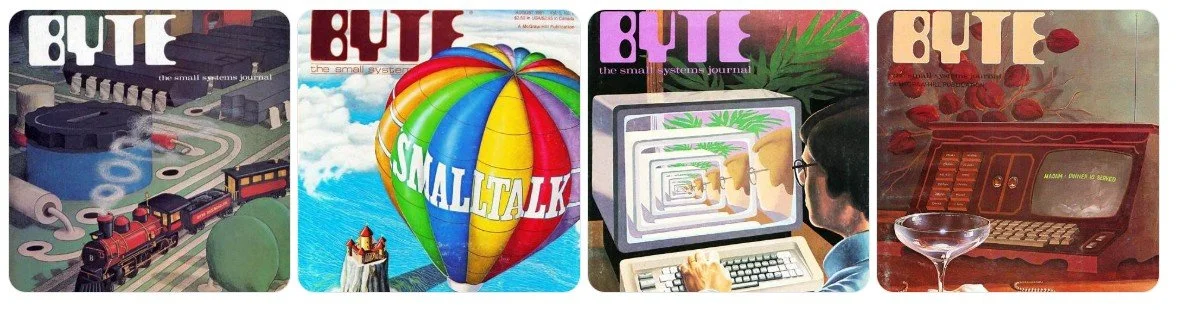

Robert Tinney: A Piece of Public Imagination Vanishes

Byte magazine artist Robert Tinney, who illustrated the birth of PCs, dies at 78. The significance of this obituary isn’t just “a beloved illustrator died.” It’s that a major piece of the public imagination of early personal computing has just formally passed into history. Tinney helped invent the visual language of personal computing.

AI Legislation Weather Report: This Week in “Please Stop Letting Robots Talk to Kids Like That”

If the bill numbers in Transparency Coalition’s weekly roundup make your eyes blur, that’s normal, and it’s also the wrong way to read it. This isn’t “AI regulation” in the abstract. It’s a rolling, state-by-state effort to write product-safety rules for the places AI is already touching everyday life: chatbots that impersonate humans, tools that can manufacture sexual content, systems that can imitate your face and voice, and—most urgently—interfaces designed to keep kids engaged.

Think of this update as an AI weather report: what moved this week, what’s building pressure, and what’s likely to become enforceable next.

There won’t be a single national “AI law” that arrives all at once. There’s a patchwork forming in real time, and the pattern matters more than the bill numbers.

Holding the Line @ Backchannels

I’m joining 4S Backchannels—the Society for Social Studies of Science blog for shorter, timelier, media-rich STS scholarship—and my first piece is live: “Holding the Line: Values Drift, AI Anomia, and the Craft of Accountable Leadership.” 👇

Invisible work, long before AI

Invisible work has always been here. It is the creative contribution behind the great man’s invention, the editor who makes the Nobel Prize possible, the unnamed colorists and inkers in pre‑digital comics and animation whose hands trained a generation’s visual imagination.

Bruises Beneath Brocade

What does it mean that some of the most exquisitely sensitive films about constraint and moral courage—films that taught many viewers to notice class cruelty, emotional repression, and the quiet heroism of small acts—were created inside a system that often asked others to keep quiet, endure, and carry on?

Tenet 3: Stewardship Is Theater

By now, the true innovation of our era may be in the choreography of virtue: the panel, the audit, the dashboard, the values campaign, all staged to prove responsibility without ever surrendering control. It is easy to mistake more rituals for more care. But anyone who has sat through a post-mortem town hall or glossy transparency memo knows how often these spectacles exhaust dissent instead of enabling it. What passes for transparency is frequently a technology for managing consequence, not sharing it: failure is scripted into apologies and metrics while the machinery of extraction not only survives, but earns fresh legitimacy.

Tenet 2: From Whistleblower to Collective Veto

After years of solitary warnings, the center of gravity has shifted from whistleblowing to organized constraint. Since 2018, stewardship strikes have spread from the U.S. to global tech hubs and European labor venues. Google employees’ revolt against Project Maven marked a pivot from ethics as branding to ethics as veto; the global walkout the same year demonstrated that collective action could move policy. By 2020, internal protests over the treatment of AI ethics researchers made the stakes explicit. In 2023, Hollywood turned talk into contract, codifying consent and compensation for AI use.

Tenet 1: Visibility Before Progress

The manifesto set the terms for what comes next: stewardship that refuses both corporate gloss and mindless hype, insisting that change begins with clarity and accountability, not just aspiration. If you missed it, the manifesto lays out what real stewards do before the world scales new systems: they demand jurisdiction, contest the defaults, and protect the right to slow things down until harms are truly visible and priced.

AI as a Period Movie Set

Period films taught us to notice the fine grain of class and constraint: who opens the doors, who carries the trays, whose feelings must be swallowed so that another character’s moral awakening can unfold in peace. The AI products that now saturate daily life are built on a similarly stratified stage, where some workers appear in the credits (engineers, founders) and others remain offscreen (moderators, annotators, ghostwriters, and translators) despite being essential to the performance.

Stewardship for the 99%: A Manifesto

These are not merely two different keynote styles: they’re two power structures fighting over the terms of AI use and stewardship. Both are used here as illustrations of common narratives; the argument concerns systems and incentives, not individuals. On one side, the Big Tech scale-first camp (and its admirers) treats AI as the engine of extraction-led growth. Progress in this narrative means shipping models fast, pushing telemetry into every role, and celebrating access to the same tools that, in the next breath, are used to justify head-count cuts, normalize surveillance as productivity, saturate information spaces with synthetic media, and push energy and water costs onto the public. It is blitz-scale automation as civic virtue, asking people to be grateful that where managers once monitored, an automated, polished, and fluent dashboard now does the watching, scoring, and judging.