Taxonomy v Folksonomy

The distinction between taxonomy and folksonomy has significant implications, especially in the context of emerging technologies such as those developed by OpenAI. Traditional taxonomies offer structured hierarchies of knowledge, allowing for a systematic approach to information organization. Folksonomies, by contrast, represent a more fluid and emergent way of categorizing information, based on user-generated tags and metadata.

However, a challenge arises when technological advancements fail to incorporate divergent thinking and instead promote groupthink through convergent taxonomies. This is particularly evident in language models, where developers' linguistic and cultural biases can influence how language, typically the dominant one, is interpreted and represented.
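
To make the structural difference concrete, here is a minimal Python sketch of the two approaches. The sample categories, users, and tags, as well as the helper names `taxonomy_path` and `folksonomy_tags`, are hypothetical and chosen purely for illustration. The taxonomy is a hierarchy fixed in advance that assigns each item a single path, while the folksonomy is nothing more than a record of tags that users happened to apply.

```python
from collections import Counter

# Taxonomy: a hierarchy defined up front; each item sits on exactly one path.
# (Hypothetical sample data.)
taxonomy = {
    "Science": {"Biology": ["genetics"], "Physics": ["optics"]},
}

def taxonomy_path(tree, item, path=()):
    """Return the path of category names leading to `item`, or None."""
    if isinstance(tree, list):
        return path if item in tree else None
    for name, subtree in tree.items():
        found = taxonomy_path(subtree, item, path + (name,))
        if found is not None:
            return found
    return None

# Folksonomy: free-form (user, item, tag) records; structure emerges from usage.
# (Hypothetical sample data.)
user_tags = [
    ("alice", "genetics", "dna"),
    ("bob", "genetics", "heredity"),
    ("carol", "genetics", "dna"),
]

def folksonomy_tags(tags, item):
    """Count how often each tag was applied to `item` across all users."""
    return Counter(tag for _user, tagged, tag in tags if tagged == item)
```

In this sketch, `taxonomy_path(taxonomy, "genetics")` yields one authoritative location, whereas `folksonomy_tags(user_tags, "genetics")` yields a distribution of labels that shifts as users add tags.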

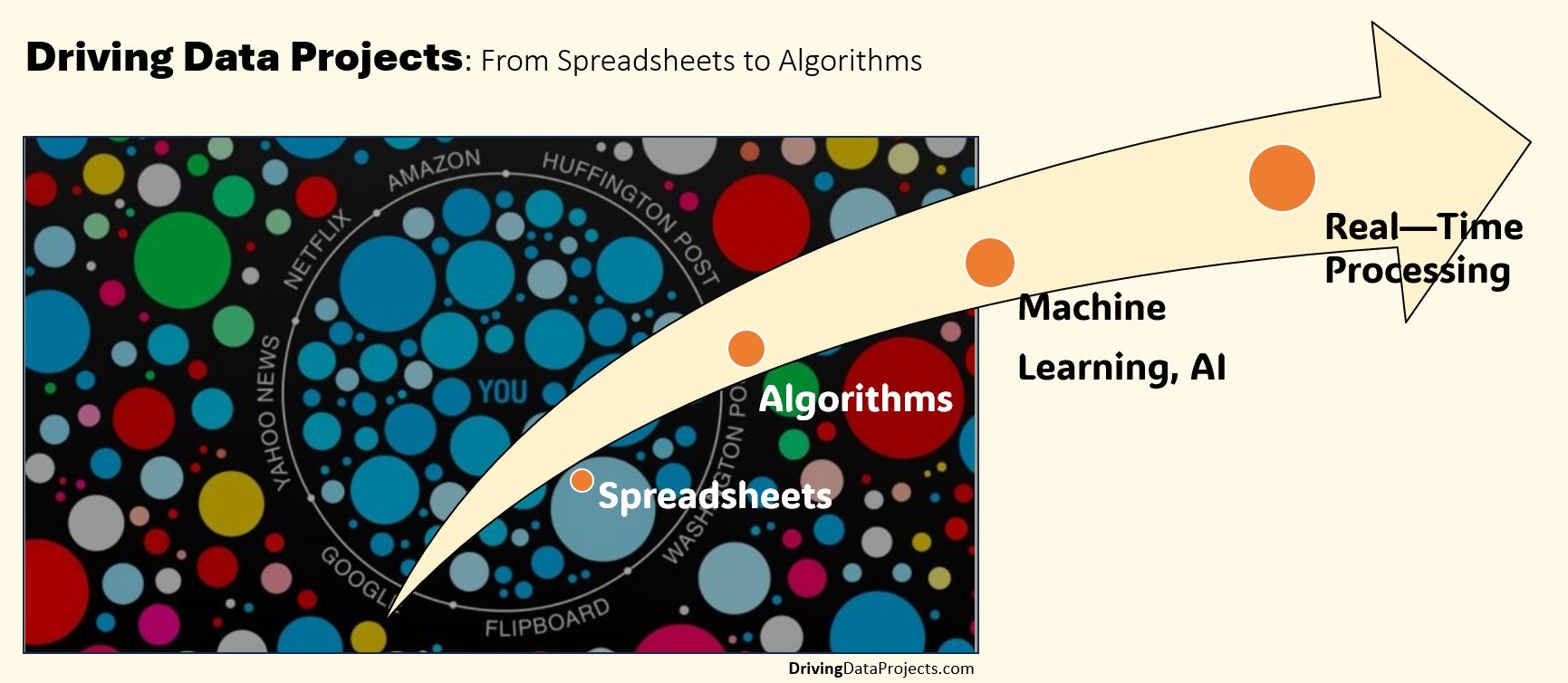

Data Trend: From Spreadsheets to Algorithms

The transition from traditional spreadsheets to sophisticated data management and analysis algorithms has changed how businesses process and leverage information, and has reshaped data-driven decision-making. The filter bubble on Facebook is an early example of what happens when a machine learning system individualizes the user experience based on user patterns.

Who is pacing this race?

Employees have been encouraged to ‘automate their roles’ to demonstrate self-direction and continuous learning. In the past, an employee's skills, motivation, and business interests determined the pace of change. Soon, the pace may be beyond their control, putting their jobs at risk before they can adapt and move on to the next set of problems. If they can’t find new problems faster than automation solves the old ones, they are not adequately prepared for the transition.